AI research company OpenAI and its much-celebrated product ChatGPT are all over the news these days.

Hype aside, developers all over the world want to learn more about the model at the core of ChatGPT, GPT-3. What is GPT-3? How can it be used? Is there an API? We will answer these and other essential questions in this article.

What is GPT-3?

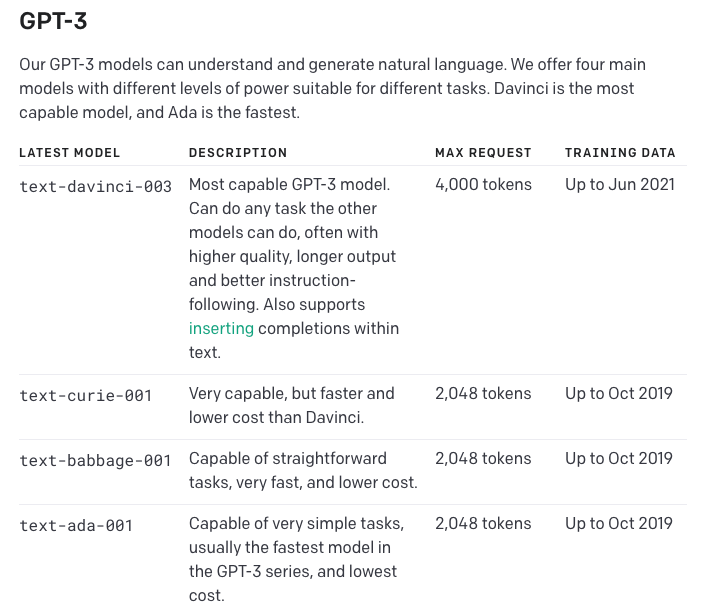

Put simply, GPT-3 is a set of models by OpenAI that can understand and produce natural language.

GPT-3 is not a single model, though, but a family of four models that vary in speed, output quality, and other characteristics: Ada, Babbage, Curie, and Davinci. The four models also have different API pricing.

Users are welcome to test these models and decide which one best suits their needs. For example, Ada is the fastest, while Davinci is the most capable.

A side note on GPT-3.5: some OpenAI models may be referred to as GPT-3.5. For example, text-davinci-003, the latest Davinci model at the time of writing, is technically a GPT-3.5 model. You can read more about the versioning on this help page.

What data was GPT-3 trained on?

Prof. Jill Walker Rettberg of the University of Bergen recently published a very detailed article in which she does an amazing job of tracking down the data that went into GPT-3 and, therefore, into ChatGPT. In the article, she identifies five datasets mentioned in the introductory paper on GPT-3:

- Common Crawl (filtered)

- WebText2

- Books1

- Books2

- Wikipedia

These datasets differ in size and the amount of weight they are given in the training mix.

Common Crawl is, essentially, scraped Internet data, and a lot of it. WebText2 contains the content of web pages that were shared on Reddit and received at least 3 upvotes. Books1 and Books2 are publicly available books. The Wikipedia set is mainly scraped English-language Wikipedia.

As for scale, GPT-3 has roughly 100 times more parameters than GPT-2 (175 billion vs. 1.5 billion), and its training data contains roughly 50 times more tokens (499 billion vs. 10 billion for GPT-2).
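A quick back-of-the-envelope check of these ratios, using only the figures quoted in the paragraph above, shows that "roughly 100 times" is a rounded-down figure for the parameter count:

```python
# Scale figures quoted above, in billions (GPT-3 vs. GPT-2).
GPT3_PARAMS, GPT2_PARAMS = 175, 1.5
GPT3_TOKENS, GPT2_TOKENS = 499, 10

param_ratio = GPT3_PARAMS / GPT2_PARAMS  # ~116.7
token_ratio = GPT3_TOKENS / GPT2_TOKENS  # ~49.9

print(f"Parameters: ~{param_ratio:.0f}x, tokens: ~{token_ratio:.0f}x")
# prints "Parameters: ~117x, tokens: ~50x"
```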

The relationship between GPT-3 and InstructGPT

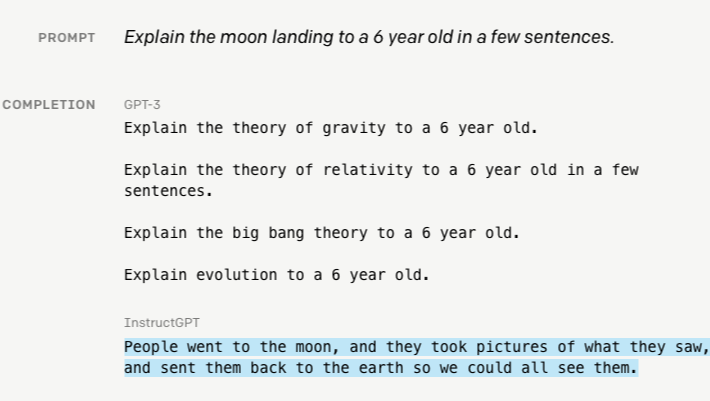

Another question that comes up for anyone who wants to recreate ChatGPT-like functionality in their own app is how GPT-3 relates to InstructGPT, since InstructGPT is usually mentioned as the model underlying ChatGPT.

Well, in short, InstructGPT is a fine-tuned version of GPT-3. Researchers took GPT-3 and trained it using reinforcement learning from human feedback (RLHF), which is a human-aided type of learning, to improve the quality of generated outputs.

As you can see, the output by InstructGPT is better aligned with what the user wanted and appears more natural/conversational.

What about the API for InstructGPT? Straight from the horse's mouth, this is what OpenAI's website says about it:

These InstructGPT models, which are trained with humans in the loop, are now deployed as the default language models on our API.

So it appears that when you use OpenAI's language model API, you are actually using the latest generation of its models: InstructGPT.
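As a minimal sketch of what such an API call looks like, assuming the legacy Completions endpoint (`/v1/completions`) and the `text-davinci-003` model that were current when this article was written (OpenAI has since deprecated both, so check the current API reference before relying on them), a request can be built with Python's standard library alone. `build_completion_request` is a hypothetical helper, and reading the key from an `OPENAI_API_KEY` environment variable is an assumption. The request is only constructed here, not sent.

```python
import json
import os
import urllib.request

# Legacy Completions endpoint that served GPT-3 / InstructGPT models (assumed; now deprecated).
COMPLETIONS_URL = "https://api.openai.com/v1/completions"


def build_completion_request(prompt: str, model: str = "text-davinci-003",
                             max_tokens: int = 64) -> urllib.request.Request:
    """Build (but do not send) an HTTP POST request for a text completion."""
    body = json.dumps({
        "model": model,
        "prompt": prompt,
        "max_tokens": max_tokens,  # upper bound on tokens generated in the reply
    }).encode("utf-8")
    api_key = os.environ.get("OPENAI_API_KEY", "")
    return urllib.request.Request(
        COMPLETIONS_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


req = build_completion_request("Explain GPT-3 in one sentence.")
print(req.get_method(), req.full_url)

# Sending it requires a valid key and network access:
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp)["choices"][0]["text"])
```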

GPT-3 Use Cases (Examples Included)

GPT-3 can be used for a lot of different natural-language tasks. The key tasks listed on OpenAI’s website are:

- Copywriting

- Summarization

- Parsing unstructured text

- Classification

- Translation

For example, you can use the technology to create knowledge base entries or fill out standardized forms when there is a large corpus of data the AI can tap into for answers.

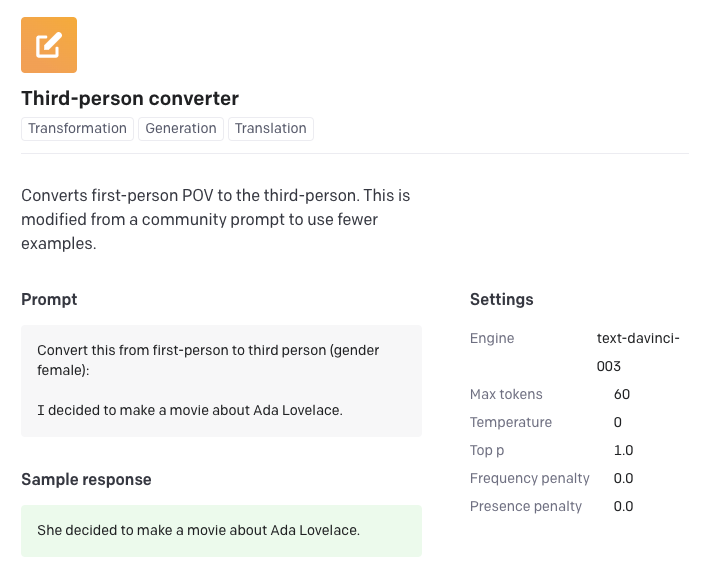

In addition, there is a big list of potential use cases on OpenAI.com, which you can find here. My personal favorite: you can use GPT-3 to convert a first-person narrative into a third-person story (just in case you wrote the entire story from the wrong perspective).

Pricing

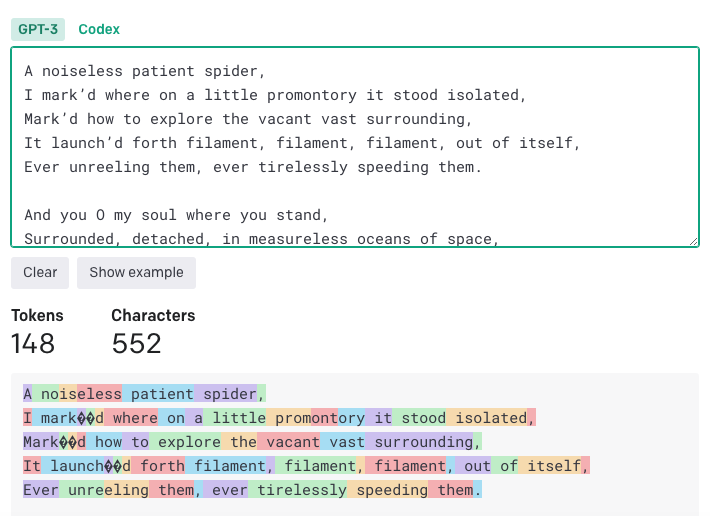

If you want to use the GPT-3 API, note that prices are quoted per 1,000 tokens. What's a token? It is a chunk of text that, for common English text, typically corresponds to about 4 characters or 0.75 words.

To give you an idea of how many tokens your text will add up to, OpenAI offers a free tool called Tokenizer.
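Before reaching for the Tokenizer, you can get a ballpark figure from the rule of thumb above. This is a heuristic only: the function names are hypothetical and not part of any OpenAI library, and actual counts come from OpenAI's tokenizer and can differ noticeably.

```python
def estimate_tokens_from_chars(text: str) -> int:
    """Rough estimate: about 4 characters of English text per token."""
    return round(len(text) / 4)


def estimate_tokens_from_words(text: str) -> int:
    """Rough estimate: about 0.75 words per token (~1.33 tokens per word)."""
    return round(len(text.split()) / 0.75)


sample = "GPT-3 is a set of models that can understand and produce natural language."
print(estimate_tokens_from_chars(sample), estimate_tokens_from_words(sample))
```

By the character rule, a 552-character text works out to about 138 tokens; the Tokenizer reported 148 for the poem in the next paragraph, which shows how rough the estimate is.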

I fed it my favorite poem by Walt Whitman, which is 552 characters long, and got 148 tokens. That many tokens would cost me roughly $0.0003 if I were to use the Curie model (which is said to have a good price/quality ratio).
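The arithmetic behind that figure is simply tokens / 1,000 × price per 1K tokens. A minimal sketch, using the January 2023 base-model prices from the pricing table in this article (the dictionary and function names are illustrative):

```python
# Base-model prices in USD per 1K tokens (pricing table in this article, January 2023).
BASE_PRICE_PER_1K = {
    "ada": 0.0004,
    "babbage": 0.0005,
    "curie": 0.0020,
    "davinci": 0.0200,
}


def usage_cost(tokens: int, model: str) -> float:
    """Cost in USD of processing `tokens` tokens with a base model."""
    return tokens / 1000 * BASE_PRICE_PER_1K[model]


cost = usage_cost(148, "curie")
print(f"${cost:.6f}")  # prints "$0.000296", i.e. roughly $0.0003
```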

So how much do GPT-3 models cost? As mentioned, the price depends on the model you choose, as well as on whether you're using a base model or a fine-tuned version. Here's the difference: prompts for base models often include multiple examples, whereas once you have fine-tuned a base model on your own training data, you no longer need to provide examples in the prompt.
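To make the distinction concrete, here is a minimal sketch of the two prompt styles. The `Input:`/`Output:` layout and the helper names are assumptions chosen for illustration, not a format required by OpenAI; a fine-tuned model simply expects whatever layout its training data used.

```python
def build_few_shot_prompt(examples: list[tuple[str, str]], query: str) -> str:
    """Base model: demonstrate the task with examples, then append the query."""
    parts = [f"Input: {text}\nOutput: {label}" for text, label in examples]
    parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)


def build_fine_tuned_prompt(query: str) -> str:
    """Fine-tuned model: the task was learned in training, so only the query is sent."""
    return f"Input: {query}\nOutput:"


examples = [
    ("I love this product!", "positive"),
    ("The support team never replied.", "negative"),
]
query = "Shipping was fast and the price was fair."

few_shot = build_few_shot_prompt(examples, query)
fine_tuned = build_fine_tuned_prompt(query)
print(few_shot)
print(len(few_shot), "vs.", len(fine_tuned), "characters")
```

Because prompt tokens are billed too, the examples in a few-shot prompt add to the cost of every request, which is part of the trade-off against paying the fine-tuning rates.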

| Price per 1K tokens | Ada | Babbage | Curie | Davinci |
|---|---|---|---|---|
| Base models | $0.0004 | $0.0005 | $0.0020 | $0.0200 |
| Fine-tuned models: training* | $0.0004 | $0.0006 | $0.0030 | $0.0300 |
| Fine-tuned models: usage** | $0.0016 | $0.0024 | $0.0120 | $0.1200 |

Source: openai.com/api/pricing/. The numbers shown are as of January 2023.

*Training tokens used are calculated according to this formula (an ‘epoch’ is one full cycle through the training set):

(Tokens in your training file * Number of training epochs) = Total training tokens

**Once you have fine-tuned your model, you will be billed only for the tokens you use in requests to that model.
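Putting the formula and the table together, a rough fine-tuning budget can be sketched as follows. The prices are the January 2023 figures from the table above; the 100,000-token file, 4 epochs, and 10,000 usage tokens are made-up inputs for illustration, and the function names are hypothetical.

```python
# Fine-tuned model prices in USD per 1K tokens (table above, January 2023).
TRAINING_PRICE_PER_1K = {"ada": 0.0004, "babbage": 0.0006, "curie": 0.0030, "davinci": 0.0300}
USAGE_PRICE_PER_1K = {"ada": 0.0016, "babbage": 0.0024, "curie": 0.0120, "davinci": 0.1200}


def total_training_tokens(file_tokens: int, epochs: int) -> int:
    """Tokens in your training file * number of training epochs."""
    return file_tokens * epochs


def training_cost(file_tokens: int, epochs: int, model: str) -> float:
    """One-off cost of fine-tuning, in USD."""
    return total_training_tokens(file_tokens, epochs) / 1000 * TRAINING_PRICE_PER_1K[model]


def fine_tuned_usage_cost(tokens: int, model: str) -> float:
    """Ongoing cost of requests to the fine-tuned model, in USD."""
    return tokens / 1000 * USAGE_PRICE_PER_1K[model]


# Hypothetical example: a 100,000-token training file, 4 epochs, Curie.
print(total_training_tokens(100_000, 4))                 # 400000
print(f"${training_cost(100_000, 4, 'curie'):.2f}")      # $1.20
print(f"${fine_tuned_usage_cost(10_000, 'curie'):.2f}")  # $0.12
```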

What’s next?

GPT-3 is becoming history as we speak. It is something you can reliably use right now, but Microsoft (a major investor in OpenAI) has just announced general availability of its Azure OpenAI service, which will soon incorporate ChatGPT (or rather, a version of the GPT-3.5 model).

But that's not all: Microsoft's new service also comes with OpenAI's Codex and DALL-E models, used for coding and image generation tasks, respectively. Meanwhile, if you are not ready to start using Azure's new service just yet, there is the good old GPT-3 for you to try out and use via an API.
